Human-in-the-Loop AI: Why the Future of Intelligence Still Needs Humans

Artificial intelligence is advancing at an unprecedented pace. Models can now write, analyze, predict, generate art, and even reason across complex domains. But as AI systems become more powerful, one truth is becoming clearer: the most effective AI systems are not fully autonomous. They are human-guided.

This is where Human-in-the-Loop (HITL) AI comes in.

What Is Human-in-the-Loop AI?

Human-in-the-Loop refers to AI systems that intentionally incorporate human judgment at key points in the machine learning lifecycle. Instead of replacing humans, HITL designs AI to collaborate with them—leveraging computational scale while preserving human oversight, ethics, and contextual understanding.

Humans may be involved in:

Training and labeling data

Reviewing or correcting model outputs

Making final decisions in high-stakes scenarios

Providing feedback that improves models over time

HITL isn’t a limitation of AI—it’s a design choice.

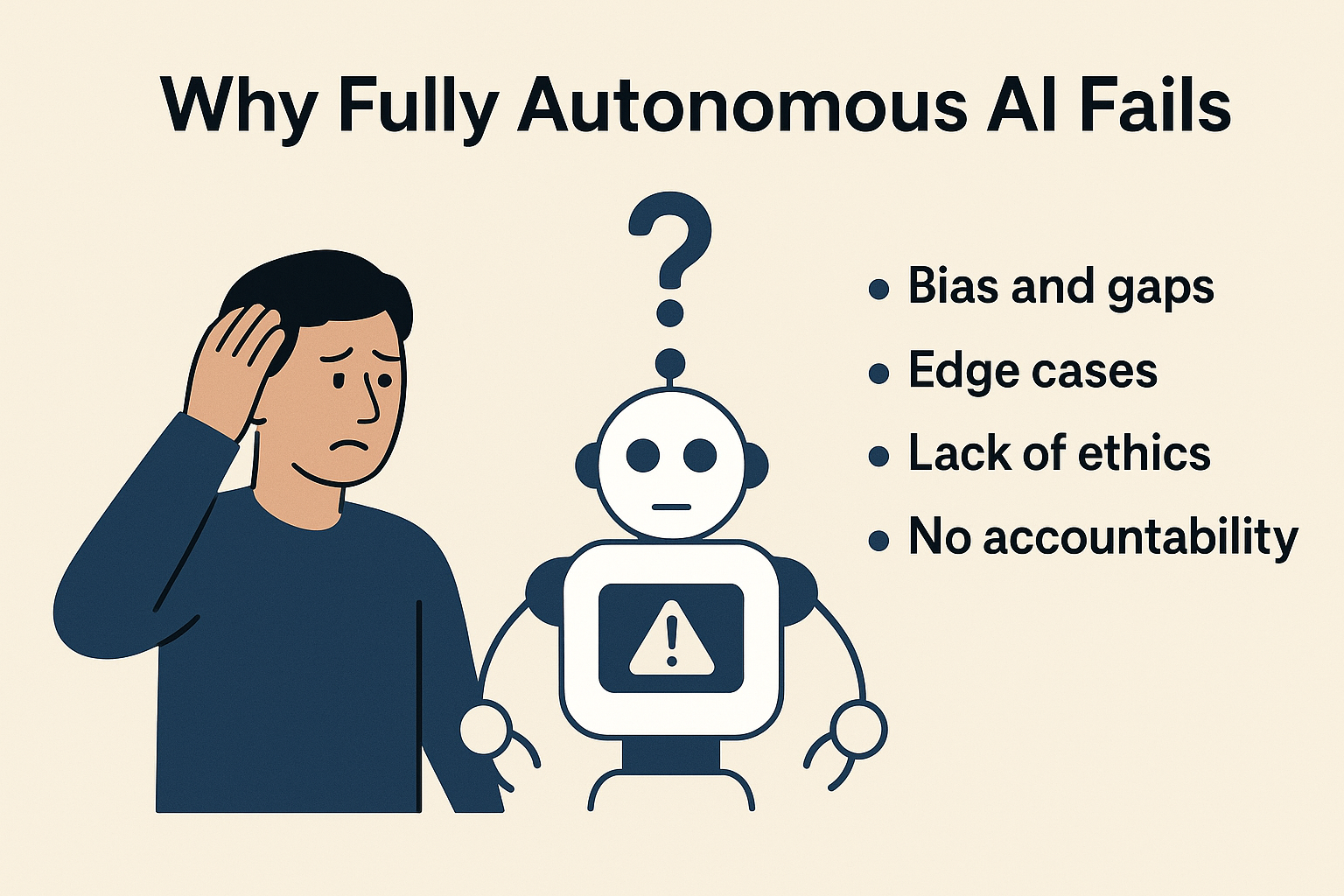

Why Purely Autonomous AI Fails

Pure automation assumes that the world is stable, predictable, and free of ambiguity. Reality is none of those things.

AI models:

Reflect the data they’re trained on (including bias and gaps)

Struggle with edge cases and novel scenarios

Cannot inherently understand values, ethics, or nuance

Lack accountability and moral responsibility

When AI operates without human intervention, small errors can scale quickly—and silently.

Human-in-the-Loop systems introduce friction where it matters, slowing things down just enough to improve accuracy, fairness, and trust.

Where HITL Matters Most

Human-in-the-Loop AI is especially critical in domains where mistakes carry real consequences:

Healthcare: Clinicians validate AI-assisted diagnoses and treatment recommendations

Finance: Humans oversee credit decisions, fraud detection, and risk modeling

Law & Compliance: Legal experts review AI-generated analysis and recommendations

Content & Media: Editors guide generative tools to ensure accuracy and responsibility

Safety & Security: Humans intervene when automated systems flag anomalies

In these environments, AI augments human capability—but humans remain accountable.

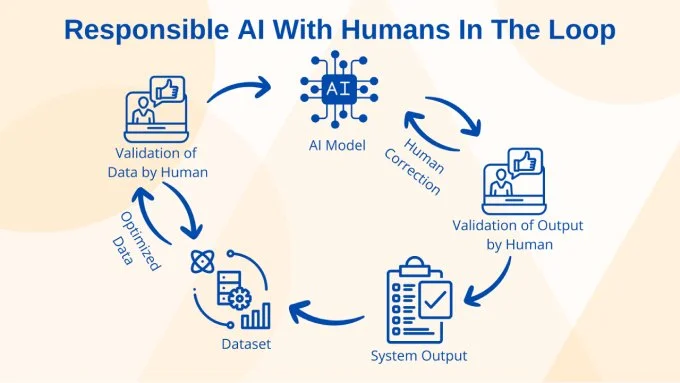

The Feedback Loop That Makes AI Better

One of the most powerful aspects of HITL is continuous learning.

When humans review, correct, or rate AI outputs, they create high-quality feedback. This feedback:

Improves model performance over time

Reduces hallucinations and errors

Aligns outputs with organizational goals and values

Builds user trust and adoption

In other words, humans don’t just supervise AI; their reviews and corrections become training signal that keeps improving it.
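A minimal sketch of how reviewer feedback might be captured and reused is shown below. It assumes a hypothetical `FeedbackRecord` schema in which a reviewer either accepts a model output or supplies a corrected label. Accepted and corrected outputs are turned into (input, target) pairs for a later retraining run, and the acceptance rate serves as one simple quality signal to track over time. All names here are illustrative, not part of any particular library.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class FeedbackRecord:
    """One human review of a single model output (hypothetical schema)."""
    input_text: str
    model_output: str
    reviewer_label: Optional[str] = None  # None means the reviewer accepted the output


def is_accepted(r: FeedbackRecord) -> bool:
    # A label identical to the model output also counts as acceptance.
    return r.reviewer_label is None or r.reviewer_label == r.model_output


def build_training_examples(records: List[FeedbackRecord]) -> List[Tuple[str, str]]:
    """Turn reviews into (input, target) pairs for the next retraining run."""
    examples = []
    for r in records:
        # Corrected outputs use the reviewer's label as the target.
        target = r.model_output if is_accepted(r) else r.reviewer_label
        examples.append((r.input_text, target))
    return examples


def acceptance_rate(records: List[FeedbackRecord]) -> float:
    """Share of outputs reviewers accepted without changes."""
    if not records:
        return 0.0
    return sum(1 for r in records if is_accepted(r)) / len(records)


# Example: one accepted output and one corrected output.
records = [
    FeedbackRecord("Invoice #12 total?", "$120"),
    FeedbackRecord("Invoice #13 total?", "$80", reviewer_label="$85"),
]
training_data = build_training_examples(records)
rate = acceptance_rate(records)
```

Here `training_data` holds the accepted "$120" answer and the corrected "$85" answer, and `rate` is 0.5. A rising acceptance rate across review batches is one way to see whether the feedback is actually improving the model.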

HITL Is About Alignment, Not Control

There’s a misconception that Human-in-the-Loop exists because AI isn’t “good enough yet.” In reality, HITL exists because intelligence without alignment is dangerous.

Humans provide:

Context AI cannot infer

Ethical judgment AI cannot reason about

Intent and meaning beyond statistical patterns

The goal isn’t to micromanage machines—it’s to ensure AI systems serve human outcomes, not just technical efficiency.

Designing for Human-in-the-Loop

Effective HITL systems are intentionally designed, not patched on later. That means:

Clear decision boundaries between human and machine

Thoughtful UX that makes review efficient, not burdensome

Escalation paths for uncertainty or high-risk outputs

Metrics that value quality and trust—not just speed
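Decision boundaries and escalation paths can be made concrete with a simple routing rule. The sketch below assumes the model returns a confidence score in [0, 1] that is reasonably calibrated, and that a separate business rule can mark a case as high-risk. The two thresholds are hypothetical placeholders that a real team would tune per domain; `Prediction` and `route` are illustrative names only.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real values depend on the domain and its risk tolerance.
AUTO_APPROVE_THRESHOLD = 0.95
HUMAN_REVIEW_THRESHOLD = 0.70


@dataclass
class Prediction:
    label: str
    confidence: float  # assumed calibrated score in [0, 1]
    high_risk: bool = False  # e.g. set by a rule such as "transaction above a limit"


def route(pred: Prediction) -> str:
    """Decide who acts on a prediction: the machine, a reviewer, or an expert."""
    if pred.high_risk:
        return "escalate"  # high-stakes cases always go to a human decision-maker
    if pred.confidence >= AUTO_APPROVE_THRESHOLD:
        return "auto"  # machine acts alone, but the decision is still logged
    if pred.confidence >= HUMAN_REVIEW_THRESHOLD:
        return "review"  # a reviewer confirms or corrects the output
    return "escalate"  # too uncertain: send to an expert


decisions = [
    route(Prediction("approve", 0.98)),
    route(Prediction("approve", 0.80)),
    route(Prediction("deny", 0.50)),
    route(Prediction("approve", 0.99, high_risk=True)),
]
```

The resulting `decisions` are `"auto"`, `"review"`, `"escalate"`, and `"escalate"`: the last case is escalated despite its high confidence because risk, not certainty alone, sets the boundary. Keeping the rule this explicit makes it easy to audit and adjust as reviewers learn where the model can be trusted.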

The best AI products don’t ask, “How do we remove humans?”

They ask, “Where do humans add the most value?”

The Future Is Collaborative Intelligence

The future of AI isn’t human versus machine. It’s human plus machine.

Human-in-the-Loop AI recognizes that while machines excel at scale and speed, humans excel at judgment, ethics, creativity, and accountability. Together, they form systems that are not only more powerful but also more responsible.

As AI continues to evolve, the organizations that win won’t be the ones that automate the fastest. They’ll be the ones that design intelligence with humans at the center.